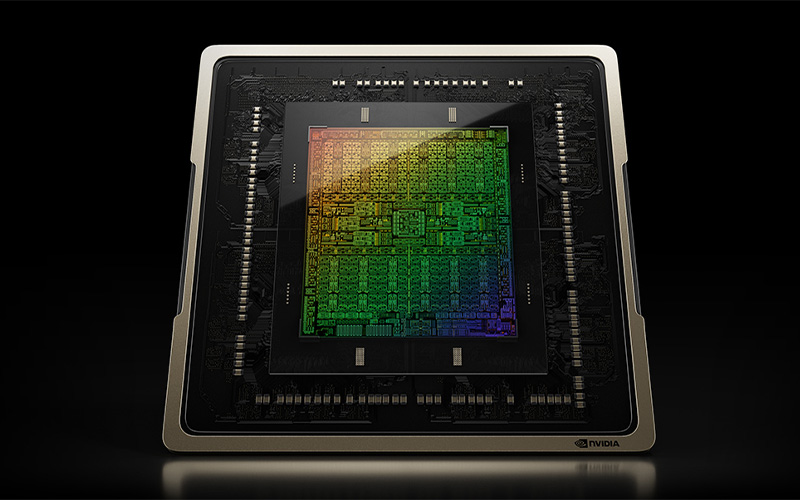

The NVIDIA L40, powered by the Ada Lovelace architecture, delivers revolutionary neural graphics, virtualization, compute, and AI capabilities for GPU-accelerated data center workloads.

The NVIDIA L40 brings the highest level of power and performance for visual computing workloads in the data center. Third-generation RT Cores and an industry-leading 48 GB of GDDR6 memory deliver up to twice the real-time ray-tracing performance of the previous generation. This accelerates high-fidelity creative workflows, including real-time, full-fidelity, interactive rendering, 3D design, video streaming, and virtual production.

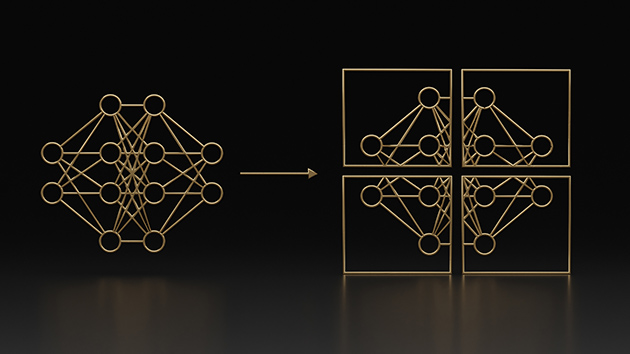

The NVIDIA L40 includes features that accelerate a wide range of compute-intensive data center workloads, including training, inference, data science, and graphics applications. Its fourth-generation Tensor Cores provide enhanced AI capabilities that accelerate visual computing workloads and raise performance for deep learning training and inference applications.

The NVIDIA L40 is designed for 24x7 enterprise data center operations and optimized for deployment at scale. With enterprise-grade components, power-efficient hardware, and features such as secure boot with an internal root of trust, the L40 delivers the highest levels of performance and reliability for data center workloads. Packaged in a dual-slot, power-efficient design, the L40 is available in a wide variety of NVIDIA-Certified Systems from leading OEM partners.

As the engine of NVIDIA Omniverse™ in the data center, the NVIDIA L40 brings powerful RTX and AI capabilities to workloads such as extended reality (XR) and virtual reality (VR) applications, design collaboration, and digital twins. For the most complex Omniverse workloads, the NVIDIA L40 accelerates ray-traced and path-traced rendering of materials, physically accurate simulations, and the generation of photorealistic 3D synthetic data.

Running professional 3D visualization applications on the NVIDIA L40 lets creative professionals iterate more and render faster, which increases productivity and speeds up project completion. Artists and designers can work in real time with complex geometry and high-resolution textures to generate photorealistic designs and simulations and to power high-fidelity creative workflows.

When combined with NVIDIA RTX™ Virtual Workstation (vWS) software*, the NVIDIA L40 delivers powerful virtual workstations from the data center or cloud to any device. Millions of creative and technical professionals can access the most demanding applications from anywhere, with performance that rivals physical workstations, while meeting the need for greater security.

Powerful training and inference performance, combined with enterprise-class stability and reliability, makes the NVIDIA L40 the ideal platform for single-GPU AI training and development. The NVIDIA L40 reduces time to completion for model training and development and for data science data-preparation workflows by delivering higher throughput and supporting a full range of precisions, including FP8.

The NVIDIA L40 takes streaming and video content workloads to the next level with three video encode and three video decode engines. With the addition of AV1 encoding, the L40 delivers breakthrough performance and improved TCO for broadcast streaming, video production, and transcription workflows.

| Specification | NVIDIA L40 |
| --- | --- |
| Architecture | NVIDIA Ada Lovelace Architecture |
| CUDA Cores | 18,176 |
| Tensor Cores | 568 |
| RT Cores | 142 |
| GPU Memory | 48 GB GDDR6 with ECC |
| Memory Interface | 384-bit |
| Memory Bandwidth | 864 GB/s |
| Max Power Consumption | 300 W |
| Thermal Solution | Passive |
| Graphics Bus | PCIe 4.0 x16 |
| Display Connectors | 4x DP 1.4*; supports NVIDIA Mosaic and Quadro® Sync |
| Form Factor | 4.4" H x 10.5" L, dual slot |
| vGPU Software Support | NVIDIA vPC/vApps, NVIDIA RTX Virtual Workstation (vWS) |
| vGPU Profiles Supported | See Virtual GPU Licensing Guide |
| Interconnect Interface | x16 PCIe Gen4 (no NVLink) |
| Power Connector | 1x PCIe CEM5 16-pin |
| NVENC / NVDEC | 3x / 3x (includes AV1 encode and decode) |
| NEBS Ready | Level 3 |
| Secure Boot with Root of Trust | Supported |

\* The L40 is configured for virtualization by default, with physical display connectors disabled.
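
As a quick sanity check on the specification table, the memory bandwidth and the core counts can be related with simple arithmetic. The sketch below is illustrative only. It takes the 384-bit interface, 864 GB/s bandwidth, and core counts from the table, and it assumes an effective GDDR6 data rate of 18 Gbit/s per pin, a figure that this datasheet does not state. It also treats the RT Core count as a stand-in for the number of GPU building blocks (one RT Core per block), which is likewise an assumption.

```python
# Figures taken from the specification table above.
CUDA_CORES = 18_176
TENSOR_CORES = 568
RT_CORES = 142
MEMORY_BUS_BITS = 384
LISTED_BANDWIDTH_GB_S = 864

# ASSUMPTION (not stated in the datasheet): effective GDDR6 data rate
# per pin, in Gbit/s.
ASSUMED_PIN_RATE_GBIT_S = 18


def peak_bandwidth_gb_s(bus_bits: int, pin_rate_gbit_s: int) -> float:
    """Peak bandwidth = bus width in bytes x per-pin data rate."""
    return bus_bits / 8 * pin_rate_gbit_s


# Bandwidth implied by the bus width and the assumed pin rate.
bandwidth = peak_bandwidth_gb_s(MEMORY_BUS_BITS, ASSUMED_PIN_RATE_GBIT_S)

# Cores per RT Core; divmod exposes any remainder instead of hiding it.
cuda_per_rt, cuda_remainder = divmod(CUDA_CORES, RT_CORES)
tensor_per_rt, tensor_remainder = divmod(TENSOR_CORES, RT_CORES)

print(f"Implied bandwidth: {bandwidth:.0f} GB/s (listed: {LISTED_BANDWIDTH_GB_S} GB/s)")
print(f"CUDA Cores per RT Core: {cuda_per_rt} (remainder {cuda_remainder})")
print(f"Tensor Cores per RT Core: {tensor_per_rt} (remainder {tensor_remainder})")
```

Under these assumptions, the implied bandwidth matches the listed 864 GB/s, and both core counts divide evenly by the RT Core count (128 CUDA Cores and 4 Tensor Cores per RT Core).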